Tackling Shadow AI in Cloud Workloads

As enterprise adoption of cloud AI systems balloons, protecting them has become a priority. Shadow AI – the unsanctioned use of AI apps – has emerged as a particularly critical threat. Here we outline two best practices that can help you combat shadow AI in your cloud workloads.

Protecting your artificial intelligence systems against cyber attacks is a multifaceted endeavor, but at its foundation lies visibility. You need a full, continuously updated inventory of all your AI assets. Every unknown AI asset is a potential attack vector because its security flaws are unmanaged.

As the Cloud Security Alliance tells us in its “AI Organizational Responsibilities” report: “Addressing the challenge of shadow AI – unauthorized or undocumented AI systems within an organization – is needed for maintaining control, security, and compliance in AI operations.”

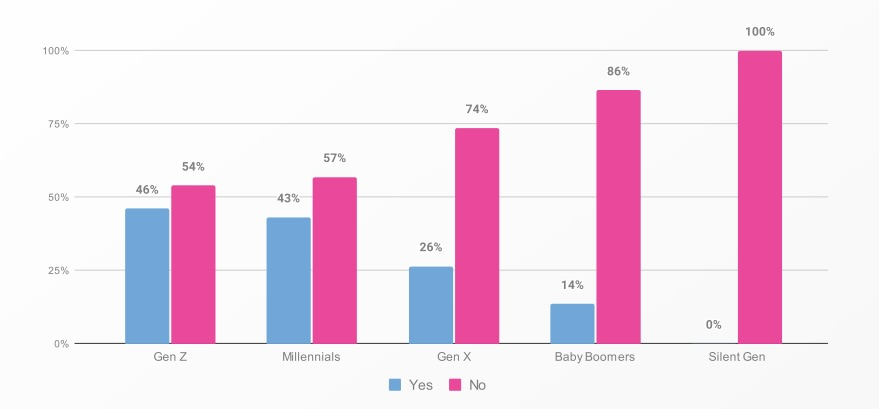

Unfortunately, the presence of these invisible AI assets is quite common. The main culprit: individual employees and teams who adopt AI tools without informing the IT department. Various reports, including ones from Software AG and from Salesforce, estimate that about half of employees use unapproved AI tools at work.

In its report “Oh, Behave! The Annual Cybersecurity Attitudes and Behaviors Report 2024-2025,” the National Cybersecurity Alliance (NCA) found that almost 40% of employees had fed company data to AI tools without their organization’s approval.

(Chart: responses to the survey question “Have you ever shared sensitive work information with AI tools without your employer’s knowledge?”)

(Source: “Oh, Behave! The Annual Cybersecurity Attitudes and Behaviors Report 2024-2025” study by the National Cybersecurity Alliance, September 2024, based on a survey of 1,862 respondents from the U.S., Canada, U.K., Germany, Australia, New Zealand and India.)

Shadow AI is also having real consequences. In its “AI Barometer: October 2024” report, market researcher Vanson Bourne found that shadow AI made it harder for 60% of surveyed organizations to maintain control over data governance and compliance.

(Chart: responses to the survey question “To what extent do you think the unsanctioned use of AI tools is impacting your organisation's ability to maintain control over data governance and compliance?”)

(Source: Vanson Bourne’s “AI Barometer: October 2024”)

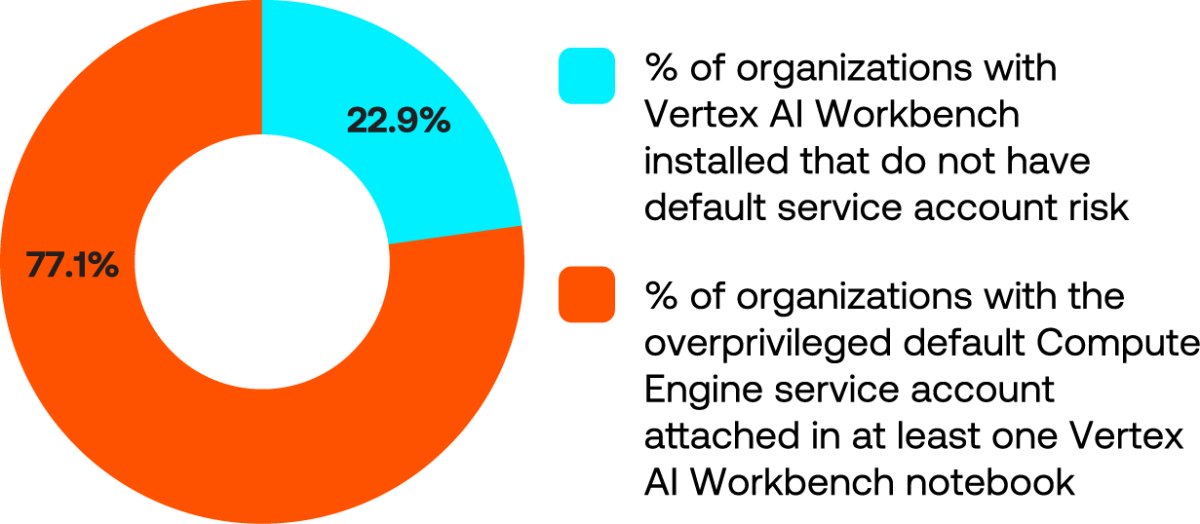

Meanwhile, as our “Tenable Cloud AI Risk Report 2025” shows, weak and default configurations abound in deployed cloud-based AI services. Based on telemetry from public-cloud and enterprise workloads scanned with Tenable products between December 2022 and November 2024, the report found that:

- 91% of the organizations with Amazon SageMaker set up had the risky default of root access (i.e., administrator privileges) in at least one notebook instance, enabling users to change system-critical files, including those contributing to the AI model.

- 14% of organizations using Amazon Bedrock did not explicitly block public access to at least one AI training bucket — and 5% had at least one overly permissive bucket.

- 77% of organizations had the overprivileged default Compute Engine service account configured in at least one Google Vertex AI Workbench notebook. All services built on this default Compute Engine service account are at risk.

(Source: “Tenable Cloud AI Risk Report 2025,” March 2025)
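To make the three findings above concrete, here is a minimal Python sketch that flags the same misconfigurations in configuration records you have already exported. It assumes plain dictionaries: the SageMaker check reads the `RootAccess` value returned by the `DescribeNotebookInstance` API, the S3 check reads the `PublicAccessBlockConfiguration` returned by `GetPublicAccessBlock`, and the Vertex AI Workbench record shape (a `service_account` key) is a hypothetical simplification. Fetching the data and account-level Block Public Access settings are out of scope.

```python
import re
from typing import Optional

# The default Compute Engine service account has the form
# PROJECT_NUMBER-compute@developer.gserviceaccount.com
DEFAULT_COMPUTE_SA = re.compile(r"^\d+-compute@developer\.gserviceaccount\.com$")

# All four settings must be true for bucket-level Block Public Access to be fully on.
PUBLIC_ACCESS_FLAGS = (
    "BlockPublicAcls",
    "IgnorePublicAcls",
    "BlockPublicPolicy",
    "RestrictPublicBuckets",
)


def sagemaker_root_enabled(notebook: dict) -> bool:
    """True if a SageMaker notebook instance record allows root access.

    A missing RootAccess value is treated as "Enabled", the SageMaker default.
    """
    return notebook.get("RootAccess", "Enabled") == "Enabled"


def bucket_not_fully_blocked(config: Optional[dict]) -> bool:
    """True if a bucket is missing any of the four Block Public Access settings.

    `config` mirrors PublicAccessBlockConfiguration; None means the bucket
    has no public access block configured at all.
    """
    if not config:
        return True
    return not all(config.get(flag, False) for flag in PUBLIC_ACCESS_FLAGS)


def workbench_uses_default_sa(instance: dict) -> bool:
    """True if a Workbench record (hypothetical shape) names the default
    Compute Engine service account. Records without a service_account key
    are not flagged."""
    return bool(DEFAULT_COMPUTE_SA.match(instance.get("service_account", "")))


if __name__ == "__main__":
    notebooks = [
        {"NotebookInstanceName": "nb-1", "RootAccess": "Enabled"},
        {"NotebookInstanceName": "nb-2", "RootAccess": "Disabled"},
    ]
    print([n["NotebookInstanceName"] for n in notebooks if sagemaker_root_enabled(n)])
    # prints ['nb-1']
```

Keeping the checks as pure functions over exported records means they can run against any inventory source without needing cloud credentials.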

In this blog, we offer you two ways to address the shadow AI threat in your organization.

Best practice #1: Gain visibility into all AI and ML cloud assets

- Best practice: Identify all cloud AI services and resources (e.g., machine learning instances, AI APIs, training data storage) being used in your environment.

- Action: Use cloud provider dashboards or a cloud-native application protection platform (CNAPP) tool to list all running AI/ML services, such as Amazon SageMaker jobs, Azure Cognitive Services, Google Vertex AI and more. Tag or inventory these assets so you know what you have.

- Value: Immediate visibility ensures you’re aware of every AI workload. You can’t protect what you don’t know exists — this step uncovers any unmanaged AI assets that might be introducing unseen risk.
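Once discovery has produced a resource list, the triage step can be quite simple. The Python sketch below assumes you have exported resources into a flat list with a type string and tags; the type prefixes are illustrative rather than complete, and the `ai-approved` tag is a hypothetical convention, not a cloud provider feature.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Illustrative, not exhaustive. AWS entries are CloudFormation type prefixes,
# the Azure entry is an ARM resource provider namespace, and the GCP entry is
# the service name used in Cloud Asset Inventory asset types.
AI_TYPE_PREFIXES = (
    "AWS::SageMaker::",
    "AWS::Bedrock::",
    "Microsoft.CognitiveServices/",
    "aiplatform.googleapis.com/",
)

# Hypothetical tag key marking an AI asset as sanctioned by IT/security.
APPROVAL_TAG = "ai-approved"


@dataclass
class Resource:
    resource_id: str
    resource_type: str
    tags: Dict[str, str] = field(default_factory=dict)


def is_ai_resource(res: Resource) -> bool:
    # str.startswith accepts a tuple and matches any of its prefixes.
    return res.resource_type.startswith(AI_TYPE_PREFIXES)


def find_shadow_ai(inventory: List[Resource]) -> List[Resource]:
    """Return AI/ML resources whose approval tag is missing or not 'true'."""
    return [
        r
        for r in inventory
        if is_ai_resource(r) and r.tags.get(APPROVAL_TAG, "").lower() != "true"
    ]


inventory = [
    Resource("nb-analytics", "AWS::SageMaker::NotebookInstance", {"ai-approved": "true"}),
    Resource("chatbot-poc", "Microsoft.CognitiveServices/accounts"),
    Resource("web-assets", "AWS::S3::Bucket"),
]
for r in find_shadow_ai(inventory):
    print(r.resource_id)  # prints chatbot-poc
```

Using a tag as the approval signal keeps the check provider-neutral: the same filter works on AWS, Azure and Google Cloud records as long as they are normalized into the `Resource` shape.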

Best practice #2: Tighten access controls and AI entitlements

- Best practice: Lock down who and what can access AI models, APIs and data. Apply the principle of least privilege to user accounts and service roles interacting with AI resources.

- Action: Review and remove any overly broad IAM roles or API keys with excessive permissions to AI services. Implement role-based access so only specific teams or applications can invoke an AI model or read its data. Use cloud identity tools or cloud infrastructure entitlement management (CIEM) features to auto-detect and remediate excessive privileges related to AI.

- Value: Restricting access greatly reduces the risk of unauthorized usage or data exposure. You can close glaring permission gaps, preventing, for example, an untrusted account from siphoning sensitive data or a well-intentioned but unauthorized employee from spinning up a new AI service.
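Part of this review can be automated. As a minimal sketch, assuming you have AWS IAM policy documents as parsed JSON, the Python function below flags `Allow` statements that grant blanket access to AI services, either the global `*` or a service-wide wildcard such as `sagemaker:*`. The service list is illustrative, and the check deliberately ignores `NotAction`, partial wildcards, resource scoping and conditions, so treat it as a first-pass filter rather than a substitute for CIEM analysis.

```python
from typing import List

# Illustrative IAM service names for AWS AI/ML services (not exhaustive).
AI_SERVICES = ("sagemaker", "bedrock")


def _as_list(value) -> List[str]:
    # In IAM policies, Action may be a single string or a list of strings.
    if isinstance(value, str):
        return [value]
    return list(value or [])


def overbroad_ai_actions(policy: dict) -> List[str]:
    """Return Allow actions granting blanket access to AI services.

    Flags the global wildcard "*" and service-wide wildcards such as
    "sagemaker:*". Deny statements are skipped; NotAction, partial wildcards
    (e.g. "sagemaker:Create*"), resources and conditions are not evaluated.
    """
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # Statement may be a single object
        statements = [statements]
    flagged = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        for action in _as_list(stmt.get("Action")):
            # IAM action names are case-insensitive, so compare in lower case.
            service, _, name = action.lower().partition(":")
            if action == "*" or (service in AI_SERVICES and name == "*"):
                flagged.append(action)
    return flagged


policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "sagemaker:*", "Resource": "*"},
        {"Effect": "Allow", "Action": ["bedrock:InvokeModel"], "Resource": "*"},
    ],
}
print(overbroad_ai_actions(policy))  # prints ['sagemaker:*']
```

The flagged actions are candidates for replacement with the specific operations a team actually needs, such as `bedrock:InvokeModel` in the second statement.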

Conclusion

The Tenable Cloud Security CNAPP offers several capabilities that help mitigate the threat of shadow AI in your cloud workloads, including AI security posture management (AI-SPM), data security posture management (DSPM) and cloud infrastructure entitlement management (CIEM). The platform automatically discovers AI assets and sensitive data across clouds, enforces best-practice configurations and least privilege, and continuously monitors for risk at enterprise scale.

To get more information, visit the Tenable Cloud Security home page and request a demo.
