How Smart Secrets Storage Can Help You Avoid Cloud Security Risks

The not-so-sensitive locations that may tempt you when storing sensitive information — why to avoid them and how.

In our work with cloud environments, we frequently review the security posture of real-life environments and come upon surprisingly concerning findings. Today’s topic is the storing of secret information in ways that might put it at risk.

When developing and deploying software, engineers often need to use sensitive strings such as passwords, database connection strings, access tokens and API keys. Cloud vendors invest a lot of resources in providing convenient tools that help ensure such secrets are used and stored securely. The problem arises when these tools are not used, which increases the risk of severe mistakes and unnecessary exposure of sensitive information.

In this post we review the risky locations that we’ve seen used to store sensitive data in Amazon Web Services (AWS) environments. Note that we collected this data without the need for access to sensitive information. Most of the findings were made using access to an AWS managed policy, SecurityAudit, which is commonly provided to an environment’s auditors — and even third-party applications over which the account owner has little control.

Storing API keys and other secrets

Before diving into the problem, let’s discuss the desired best practice. When you use very sensitive information, such as API keys (which can be used to impersonate an account and/or perform actions on the account owner’s behalf), it’s best to store it encrypted and with access controls that allow you to hand pick who can access it. The last thing you want is to store the sensitive data in plaintext in a location to which access can be, and often is, granted for other purposes.

AWS offers a convenient service called AWS Secrets Manager that allows you to store, rotate and easily access secret strings. There are other tools you may use for this purpose — either natively in or external to AWS — but, for simplicity's sake, we’ll focus on AWS Secrets Manager to show how simple it is to avoid secrets storing risk. AWS Secrets Manager uses key-value pairs, each holding a secret string, and lets you control, using identity and access management (IAM) and/or resource-based policies, which identities can access the string.

Managing access to these secrets (which are essentially AWS resources) is very important. Doing so is, of course, much easier when the entire purpose of the resource is, as is the case here, storing the secret.

Let’s look at the risky locations where we found sensitive strings stored.

Storing secrets in environment variables

When you set up a task definition in AWS’s Elastic Container Service you can define environment variables, a convenient way to pass information to the code running in the container. This convenience has not eluded developers, who need sensitive strings in their code and are therefore tempted to store them in environment variables. Doing so is extremely bad practice, because any identity allowed to perform describe-task-definition can read the configuration of the task definition, including the values of its environment variables. The identity needs only the permission ecs:DescribeTaskDefinition, which, for example, is part of the SecurityAudit policy because that policy allows the actions covered by ecs:Describe*.

As a side note, storing secrets in environment variables is also bad practice operationally: updating the value of an environment variable requires you to create a new revision of the task definition, which makes the important security practice of rotating secrets much more difficult.
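To make the exposure concrete, here is a minimal Python sketch of what a read-only identity could do with the output of describe-task-definition. The function name, the name-matching pattern and the sample data are hypothetical and only illustrate the idea; the input is assumed to be a dict shaped like the JSON that `aws ecs describe-task-definition` returns.

```python
import re

# Hypothetical heuristic: variable names that hint at a secret.
SENSITIVE_NAME = re.compile(
    r"(PASSWORD|PASSWD|SECRET|TOKEN|API_?KEY|CONNECTION_?STRING)", re.IGNORECASE
)


def plaintext_secret_candidates(describe_response):
    """Return (container, variable) pairs whose names suggest a plaintext secret.

    `describe_response` is assumed to be shaped like the output of
    `aws ecs describe-task-definition`.
    """
    findings = []
    task_def = describe_response.get("taskDefinition", {})
    for container in task_def.get("containerDefinitions", []):
        for var in container.get("environment", []):
            if SENSITIVE_NAME.search(var.get("name", "")):
                findings.append((container.get("name"), var["name"]))
    return findings


# Illustrative sample data, not taken from a real environment.
sample = {
    "taskDefinition": {
        "containerDefinitions": [
            {
                "name": "web",
                "environment": [
                    {"name": "DB_PASSWORD", "value": "example-only"},
                    {"name": "LOG_LEVEL", "value": "info"},
                ],
            }
        ]
    }
}
print(plaintext_secret_candidates(sample))
```

Name matching is only a rough first pass: a secret stored under an innocuous variable name would be missed, and the values themselves are just as readable to the caller.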

AWS offers a “valueFrom” option that lets you specify “secrets” as part of the container definition in the task definition and have their values read from a Secrets Manager secret. As per AWS documentation, you will need to grant the Elastic Container Service (ECS) execution role access to the secret’s value and, if the encryption is done using a custom Key Management Service (KMS) key, permission to perform kms:Decrypt on that key. In return, once the secret is configured you can change its value without creating a new revision of the task definition, and the sensitive asset is protected from any identity that does not have these permissions.
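As a rough illustration of the migration, the sketch below rewrites a container definition so that selected variables move from `environment` to `secrets` entries with `valueFrom`. The helper name and the sample values are hypothetical, and `<SECRET_ARN>` is a placeholder for the ARN of your own secret.

```python
import copy


def move_to_secrets(container_def, secret_arns):
    """Return a copy of an ECS container definition in which the variables
    named in `secret_arns` are moved from `environment` to `secrets`.

    `secret_arns` maps a variable name to a Secrets Manager ARN.
    """
    updated = copy.deepcopy(container_def)
    # Drop the plaintext entries that are being migrated.
    updated["environment"] = [
        var for var in container_def.get("environment", [])
        if var["name"] not in secret_arns
    ]
    secrets = list(updated.get("secrets", []))
    existing = {entry["name"] for entry in secrets}
    for name, arn in secret_arns.items():
        if name not in existing:
            secrets.append({"name": name, "valueFrom": arn})
    updated["secrets"] = secrets
    return updated


# Illustrative container definition with a plaintext secret.
container = {
    "name": "web",
    "environment": [
        {"name": "DB_PASSWORD", "value": "example-only"},
        {"name": "LOG_LEVEL", "value": "info"},
    ],
}
migrated = move_to_secrets(container, {"DB_PASSWORD": "<SECRET_ARN>"})
print(migrated)
```

The result would still have to be registered as a new task definition revision, and the old plaintext value should be treated as exposed.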

Note that since ECS task definition revisions cannot be deleted, once a secret is exposed via a task definition configuration it will remain exposed, forcing you to rotate the secret (which is in any case a good idea).

Secrets Manager in Lambda function

Lambda functions are another compute resource in which environment variables are used to make values easily accessible to code. By default, Lambda encrypts environment variables at rest using a KMS key managed by AWS. However, this encryption doesn’t make it any harder for an identity with IAM access to read them: any identity with the permission lambda:GetFunctionConfiguration, which allows the performing of get-function-configuration, will be able to read the contents of the variables. You can resolve this exposure risk by encrypting with a customer-managed KMS key or by using AWS Secrets Manager.

Lambda allows you to use a customer-managed KMS key. Users with access to lambda:GetFunctionConfiguration and without access to kms:Decrypt on the key won’t be able to see the environment variables in plaintext (they will, however, if they have access to use the key for decryption). In addition, you can even configure the variables to be encrypted “in transit,” meaning that they will arrive in ciphertext to the Lambda runtime and therefore need to be decrypted as part of your code. This requires the Lambda execution role to have the IAM permission kms:Decrypt on the key (the Lambda console provides a template IAM policy for configuring this, along with code snippets for decrypting the ciphertext). You can find more information here on securing Lambda environment variables using encryption.
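For the “in transit” option, the decryption step inside the function might look roughly like the following Python sketch. It assumes the variable holds base64-encoded ciphertext and that the ciphertext was encrypted with an encryption context keyed on the function name, which is what the console helper snippet is understood to use; verify both against the snippet the console generates for you. The `kms_client` argument is expected to behave like `boto3.client("kms")`, and the stand-in class exists only so the sketch runs without AWS access.

```python
import base64
import os


def decrypt_env_var(kms_client, name, environ=os.environ):
    """Decrypt a base64 ciphertext held in an environment variable.

    `kms_client` is expected to behave like boto3.client("kms").
    """
    ciphertext = base64.b64decode(environ[name])
    response = kms_client.decrypt(
        CiphertextBlob=ciphertext,
        # Assumption: the ciphertext is bound to the function name.
        EncryptionContext={"LambdaFunctionName": environ["AWS_LAMBDA_FUNCTION_NAME"]},
    )
    return response["Plaintext"].decode("utf-8")


class _ReversingKms:
    """Offline stand-in for a KMS client: 'decrypts' by reversing the bytes."""

    def decrypt(self, CiphertextBlob, EncryptionContext):
        return {"Plaintext": CiphertextBlob[::-1]}


# Hypothetical environment, used only to exercise the function locally.
fake_env = {
    "DB_PASS": base64.b64encode(b"terces").decode("ascii"),
    "AWS_LAMBDA_FUNCTION_NAME": "demo-function",
}
decrypted = decrypt_env_var(_ReversingKms(), "DB_PASS", fake_env)
print(decrypted)
```

In a real function you would decrypt once, outside the handler, so that warm invocations do not call KMS again.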

However effective it is to use KMS for encrypting environment variables, we still recommend that you use Secrets Manager secrets to manage access to sensitive data used by code running in Lambda functions. To do so, you simply need to store the sensitive value as a secret in Secrets Manager and enable the Lambda’s execution role to access it using the following policy:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": "secretsmanager:GetSecretValue",
      "Resource": "<SECRET_ARN>"
    }
  ]
}

This policy makes it possible to access the value of the secret in the code executed by the Lambda function. For example, in Node.js you can use this implementation from the awsdocs GitHub repository, and Avinash Dalvi’s post on dev.to shows an example of using it.
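The same idea can be sketched in Python. The `SecretCache` class below is a hypothetical helper, not part of any AWS SDK; it expects a client that behaves like `boto3.client("secretsmanager")` and reads the `SecretString` field of the `get_secret_value` response. The stand-in client is included only so the sketch runs offline.

```python
import json


class SecretCache:
    """Fetch each secret once per execution environment and reuse it."""

    def __init__(self, client):
        # `client` is expected to behave like boto3.client("secretsmanager").
        self._client = client
        self._values = {}

    def get(self, secret_id):
        if secret_id not in self._values:
            response = self._client.get_secret_value(SecretId=secret_id)
            # Assumes a string secret; binary secrets use "SecretBinary".
            self._values[secret_id] = response["SecretString"]
        return self._values[secret_id]

    def get_json(self, secret_id):
        """Parse a secret stored as a JSON document of key-value pairs."""
        return json.loads(self.get(secret_id))


class _FakeSecretsManager:
    """Offline stand-in that counts how often the secret is fetched."""

    def __init__(self):
        self.calls = 0

    def get_secret_value(self, SecretId):
        self.calls += 1
        return {"SecretString": '{"username": "app", "password": "example-only"}'}


fake = _FakeSecretsManager()
cache = SecretCache(fake)
creds = cache.get_json("<SECRET_ARN>")
cache.get_json("<SECRET_ARN>")  # served from the cache, no second call
print(creds["username"], fake.calls)
```

Caching trades freshness for fewer API calls: after a rotation, a warm execution environment keeps the old value until the cache is refreshed, so a real implementation would likely add an expiry.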

Elastic Compute Cloud (EC2) user data secrets exposure

As described in AWS documentation: “When you launch an instance in Amazon EC2, you have the option of passing user data to the instance that can be used to perform common automated configuration tasks and even run scripts after the instance starts.”

While a great way to automate running scripts at launch, EC2 user data is yet another place where we have seen secrets left unnecessarily exposed. For example, if you need to use a password as part of your script you might be tempted to store it in plaintext in the script you pass as user data. Doing so will expose the secrets to anyone with the permission ec2:DescribeInstanceAttribute.

As Karol Filipczuk describes in his blog, Secrets Manager can be a good solution for this risky exposure as well. By allowing the role enabled for the EC2 instance to have access to the value of a secret, you can access that value in the bash script and use it in various ways depending on your needs, e.g., store it in an environment variable or write it to a file. This method will keep the sensitive string out of the actual user data, which is accessible to any identity that can read the EC2 attributes, while still allowing you to use it when needed.
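A minimal sketch of this approach, written as a Python helper that renders the user data script, is shown below. The function name, the variable name DB_PASSWORD, the secret name and the target file path are all hypothetical, and the script assumes the AWS CLI is available on the instance image and that the instance role may call secretsmanager:GetSecretValue on the secret.

```python
import shlex


def render_user_data(secret_id, region, env_file="/etc/app.env"):
    """Build an EC2 user data script that fetches the secret at boot,
    so only the secret's identifier appears in the user data."""
    return "\n".join([
        "#!/bin/bash",
        "set -euo pipefail",
        # Files created below are readable by their owner only.
        "umask 077",
        "DB_PASSWORD=$(aws secretsmanager get-secret-value "
        f"--secret-id {shlex.quote(secret_id)} --region {shlex.quote(region)} "
        "--query SecretString --output text)",
        f'printf "DB_PASSWORD=%s\\n" "$DB_PASSWORD" > {shlex.quote(env_file)}',
        "",
    ])


script = render_user_data("prod/db/password", "us-east-1")
print(script)
```

Anyone who can read the instance attributes now sees only which secret is used, not its value; reading the value still requires permission on the secret itself.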

Secrets exposed in tags

You may be surprised to learn that, in some environments, developers store sensitive keys such as AWS access keys and database passwords in tags placed on resources such as EC2 instances. Doing so exposes this sensitive information to any identity with access to ec2:DescribeTags, a permission that is not usually considered sensitive.
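As an illustration of how easily such tags can be spotted, the following Python sketch scans a response shaped like the output of `aws ec2 describe-tags`. The function name and the key-name pattern are hypothetical heuristics; the value pattern relies on the documented shape of AWS access key IDs (a 20-character string starting with AKIA or ASIA), and the sample uses the example key ID from AWS documentation.

```python
import re

# AWS access key IDs: "AKIA" (long-term) or "ASIA" (temporary) + 16 characters.
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b")
# Hypothetical heuristic for tag keys that hint at a secret.
SENSITIVE_KEY = re.compile(r"(password|secret|token|api[_-]?key)", re.IGNORECASE)


def suspicious_tags(describe_tags_response):
    """Return (resource_id, tag_key) pairs that look like stored secrets."""
    findings = []
    for tag in describe_tags_response.get("Tags", []):
        value_hit = ACCESS_KEY_ID.search(tag.get("Value", ""))
        key_hit = SENSITIVE_KEY.search(tag.get("Key", ""))
        if value_hit or key_hit:
            findings.append((tag.get("ResourceId"), tag.get("Key")))
    return findings


# Illustrative sample data, not taken from a real environment.
instance = "i-0123456789abcdef0"
sample_tags = {
    "Tags": [
        {"Key": "Name", "ResourceId": instance, "ResourceType": "instance", "Value": "web-1"},
        {"Key": "deploy_key", "ResourceId": instance, "ResourceType": "instance", "Value": "AKIAIOSFODNN7EXAMPLE"},
        {"Key": "db-password", "ResourceId": instance, "ResourceType": "instance", "Value": "example-only"},
    ]
}
print(suspicious_tags(sample_tags))
```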

The odd thing is that to access the value within the EC2 instance, you have to use the describe-tags function; for example, by a call to AWS’s Command Line Interface (CLI) or an alternative API. Since accessing the secret is as simple as making a CLI call, using this method is only slightly easier than storing the sensitive value in a Secrets Manager secret (and, in some aspects, is possibly more cumbersome as you have to change the user data when its value changes). The only real “advantage” to using the less secure method is that you don’t have to manage the permissions for the IAM role used by the EC2 instance that allows it to access the secret and you are saving the cost of storing the secret. These benefits certainly aren’t worth risking exposure of sensitive information.

Plaintext SSM parameter

Many consider the AWS Systems Manager (SSM) Parameter Store to be a good tool for storing various strings, and we concur. However, when it comes to sensitive information, it’s best to use the Parameter Store feature that stores strings as the SecureString type, encrypted with a customer-managed KMS key. With this approach, even if a principal has wide-ranging access (even to all resources) to perform ssm:GetParameter*, it won’t be able to read the parameter’s value unless it also has kms:Decrypt on the KMS key used to encrypt the parameter.

On quite a few occasions we’ve run into sensitive strings stored in parameters of type “String.” These configurations would allow identities with the ability to perform ssm:GetParameter on all resources in the account to access the sensitive strings as well.
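A quick way to look for such parameters is to review parameter metadata, which does not require reading any values. The Python sketch below works on a dict shaped like the output of `aws ssm describe-parameters`; the function name, the name pattern and the sample parameters are hypothetical.

```python
import re

# Hypothetical heuristic: parameter names that hint at a secret.
SENSITIVE_NAME = re.compile(
    r"(password|passwd|secret|token|api[_-]?key|connection[_-]?string)",
    re.IGNORECASE,
)


def plaintext_parameter_candidates(describe_parameters_response):
    """Return names of non-SecureString parameters that look sensitive."""
    return [
        param["Name"]
        for param in describe_parameters_response.get("Parameters", [])
        if param.get("Type") != "SecureString"
        and SENSITIVE_NAME.search(param.get("Name", ""))
    ]


# Illustrative sample data, not taken from a real environment.
sample_params = {
    "Parameters": [
        {"Name": "/prod/db/password", "Type": "String"},
        {"Name": "/prod/api/token", "Type": "SecureString"},
        {"Name": "/prod/log-level", "Type": "String"},
    ]
}
print(plaintext_parameter_candidates(sample_params))
```

Parameters flagged this way are candidates for being recreated as SecureString, with the old values rotated.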

Secrets misconfiguration findings

These findings indicate that when granting third-party access to an environment it’s very possible to unintentionally grant access to, say, read database connection strings stored in a risky location. This can also mean that the fallout from a breach in which an identity is compromised will be much greater as the malicious actor will be able to gain access to valuable assets.

Once we realized that secrets misconfiguration is more common and versatile than we thought, we leveraged the Tenable Cloud Security engine to find strings likely to be sensitive due to their contents and/or format and that are stored insecurely. We started displaying these findings on our platform with simple guidance as to how to resolve the issue.

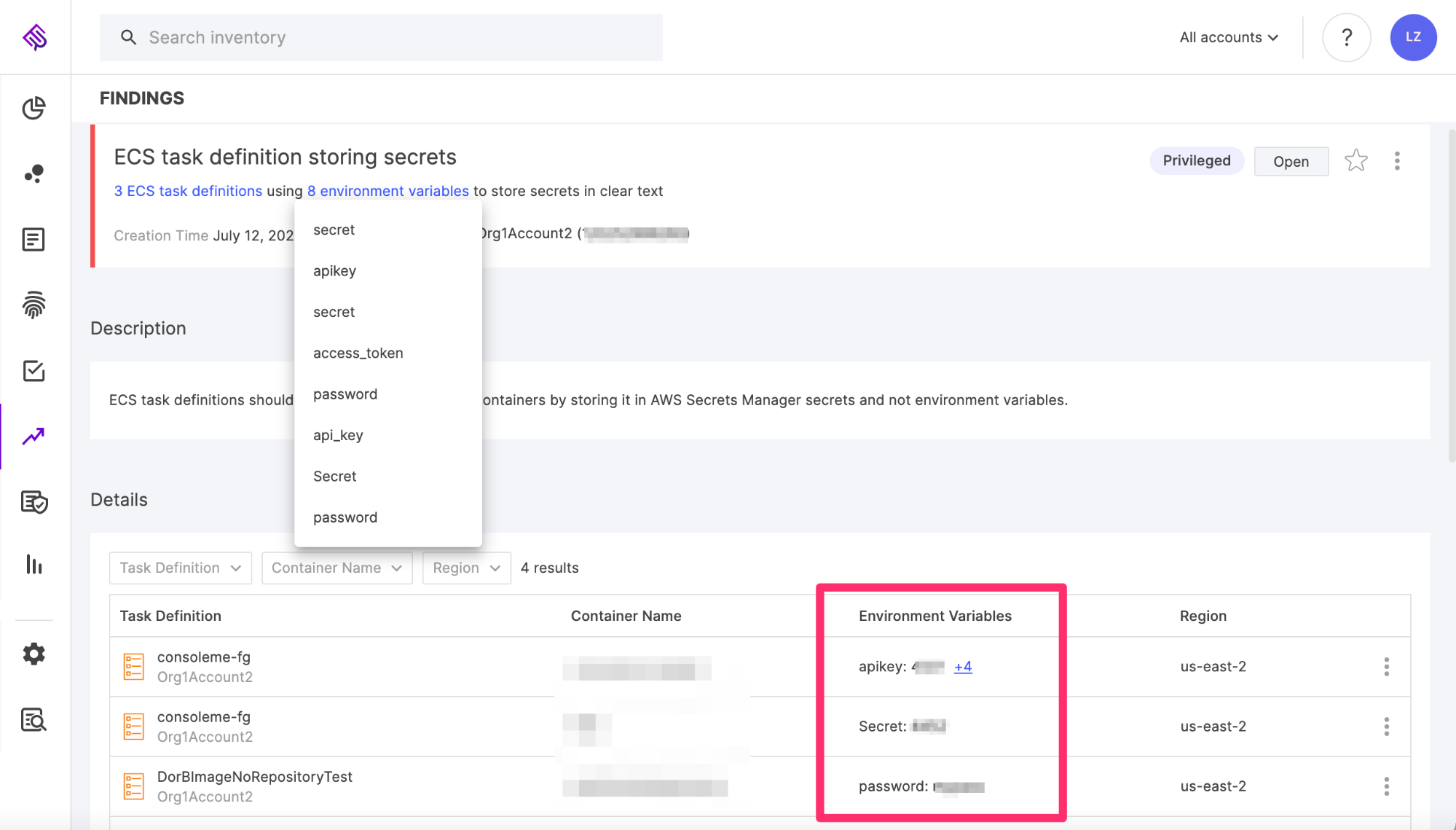

For example, when the analysis encounters ECS task definitions that hold sensitive strings in plaintext in their environment variables, the platform presents a finding that looks like this:

Figure 1: Finding indicating sensitive strings held in plaintext in ECS task definitions’ environment variables

Image source: Tenable

The findings display allows you to see precisely what sensitive information is held and where, and guides you in how to migrate the environment variables to AWS Secrets Manager. In this particular case, since task definition revisions cannot be deleted, it also instructs you to rotate the value of the secrets.

