Securing Big Data so You’re Not Fooled by Bogus Information

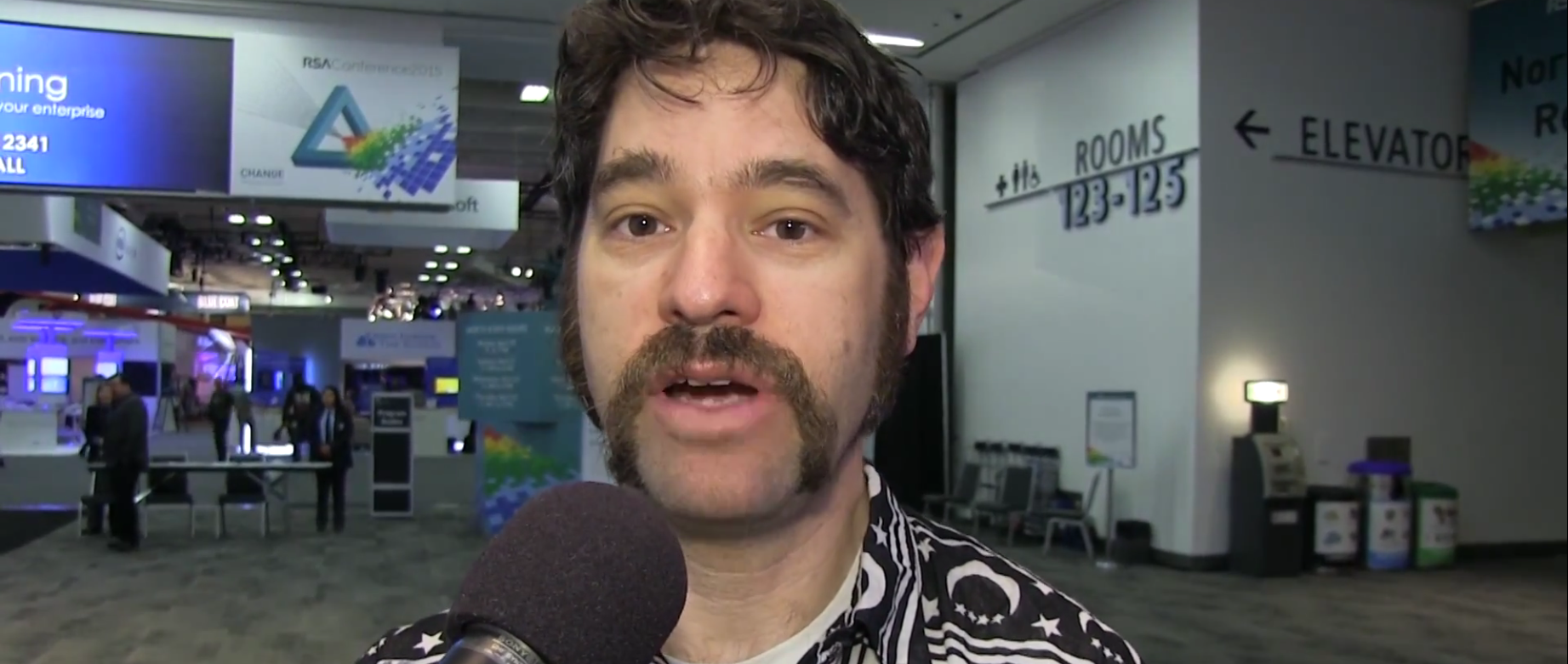

“When data gets really big, really massive scale, it becomes really hard to secure, because you’re talking about breaking down a lot of assumptions we used to have. The perimeters disappear, you go to truly distributed, truly wide scale, and it’s just so large, and you don’t want to slow it down because that’s where its best benefits are: performance,” said Davi Ottenheimer (@daviottenheimer), Senior Director of Trust at EMC, in our conversation at the 2015 RSA Conference in San Francisco.

Ottenheimer believes we’re failing to protect big data because we rely on old security techniques: “They’re falling back on the perimeter… We should be moving in a direction of contextual control, where you can only see the very little bits of information you need to.”

There’s a common sense that big data is inherently resilient, that any errors will be washed out by the sheer volume of information. In reality, though, a data set can contain a lot of bogus information, said Ottenheimer, and crunching it can still yield what appears to be a valid result.

“You end up with an assumption that the technology is so great, it’s so impressive, it’s doing its magic that you can just trust it implicitly,” said Ottenheimer. “And that’s very dangerous because we find out later that it made a mistake or there’s some mystery inside or there’s a bug and we didn’t account for that.”

To determine whether data is bogus, Ottenheimer suggests falling back on the classic CIA triad: confidentiality, integrity, and availability.
